Over the winter holiday break (on Christmas Eve, to be precise), Scott Hanselman and I released the next volume in the Imposter’s Handbook series. It took us just over 18 months to put this thing together and I couldn’t be happier with it.

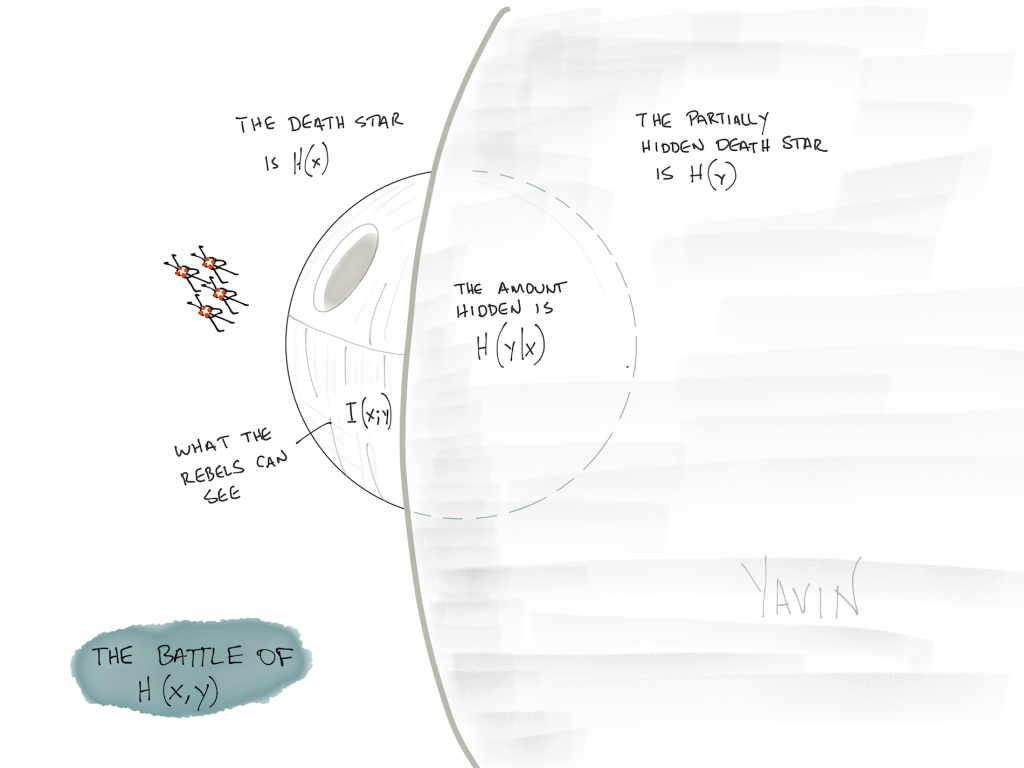

Shannon’s Second Theorem, Illustrated via Death Star

From Logic to Boolean Algebra, Binary to Circuits

There was so much content I had to ax from the first volume of The Imposter’s Handbook because I ran out of time and space. For instance: I knew very, very little about binary operations and even less about encryption and I desperately wanted to change that. Unfortunately, it had to wait until I had the time.

That time came over the last 18 months. Scott and I dove into things like binary addition and subtraction, logic gates, and Boolean Algebra.

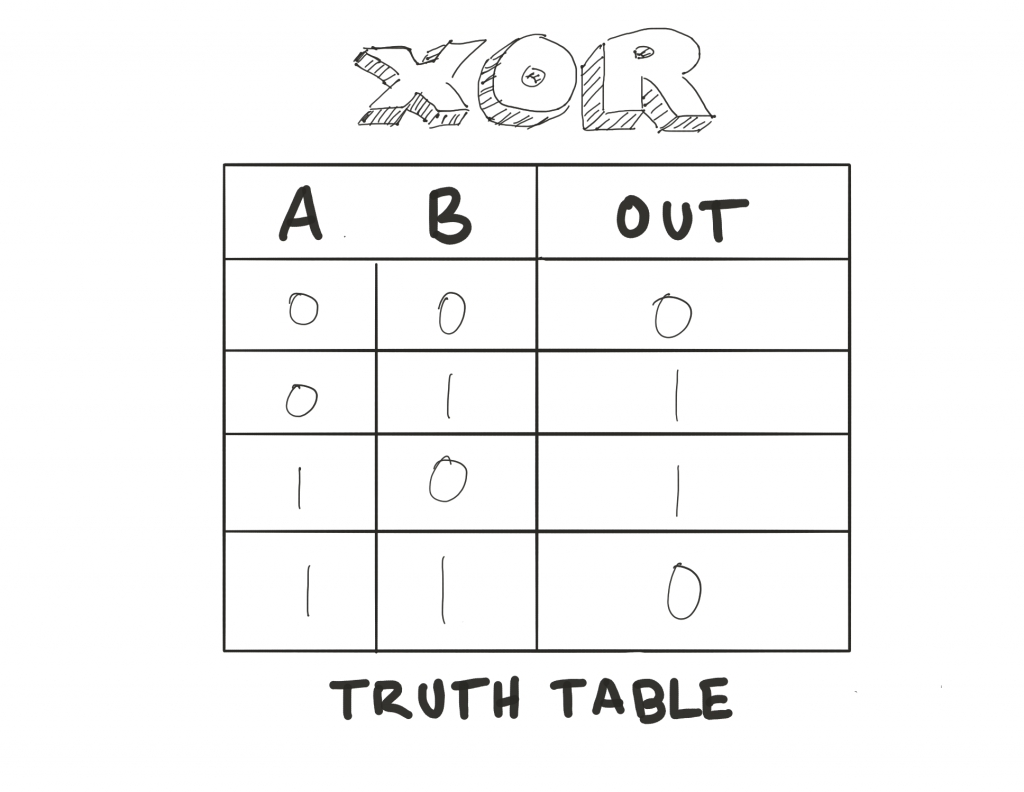

Something I could never remember: XOR
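
To make the jump from logic gates to binary addition concrete, here is a minimal Python sketch (not taken from the book). It builds XOR out of AND, OR, and NOT, then chains full adders to add two numbers one bit at a time. The function names and the 8-bit default width are assumptions chosen for illustration.

```python
def xor(a: int, b: int) -> int:
    """XOR of two single bits: 1 only when exactly one input is 1."""
    # (a OR b) AND NOT (a AND b), masked back down to a single bit
    return (a | b) & ~(a & b) & 1


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits and return (sum_bit, carry_bit)."""
    return xor(a, b), a & b


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry and return (sum_bit, carry_out)."""
    partial_sum, carry_1 = half_adder(a, b)
    sum_bit, carry_2 = half_adder(partial_sum, carry_in)
    return sum_bit, carry_1 | carry_2


def add_bits(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers, `width` bits wide.

    The final carry is dropped, so the result wraps around on overflow,
    the way a fixed-width hardware register would.
    """
    carry = 0
    result = 0
    for i in range(width):
        a = (x >> i) & 1  # i-th bit of x
        b = (y >> i) & 1  # i-th bit of y
        sum_bit, carry = full_adder(a, b, carry)
        result |= sum_bit << i
    return result
```

Calling `add_bits(5, 3)` walks the carry through each bit position, and `add_bits(255, 1)` shows the wrap-around you get when the result no longer fits in 8 bits.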

It was fun to dive into these subjects but I was not expecting what came next. Even writing this now – I’m struggling to come up with a way to accurately capture the singular importance of one person’s work. He’s been compared to Einstein, Edison, Newton – all rolled into one.

Claude Shannon and Information Theory

Claude Shannon created Information Theory with a single paper written in 1948. In it, Shannon detailed a way to describe information digitally, that is, with 1s and 0s. He showed how this information could be transmitted with virtually no loss and, as if that wasn’t enough, he also described just how much information a digital signal could contain.

We take this kind of thing for granted today, but keep in mind that in Shannon’s day, communicating over great distances meant the telegraph, the telephone, or radio!
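
The "how much information" idea can be sketched in a few lines of Python. This is an illustration rather than anything from the book: it estimates Shannon entropy, in bits per symbol, from how often each character appears in a message. The function name is an assumption, and treating each character as an independent symbol is a simplification.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(message: str) -> float:
    """Estimate Shannon entropy (bits per symbol) from character frequencies.

    H = sum over symbols of p * log2(1 / p), where p is the fraction of
    the message made up of that symbol.
    """
    counts = Counter(message)
    total = len(message)
    return sum((n / total) * math.log2(total / n) for n in counts.values())
```

A message made of one repeated character carries 0 bits per symbol, because there is never any surprise. A message that splits evenly between two characters carries 1 bit per symbol, and an even split across four characters carries 2.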

But wait, there’s more!

Prior to inventing Information Theory, Shannon wrote a master’s thesis that many people regard as the most important master’s thesis ever written.

The Creation of Digital Circuits

In the late 1930s, Claude Shannon was working on his master’s degree at MIT. He was also working with Vannevar Bush on the Differential Analyzer, a room-sized mechanical computer that solved differential equations, typically for military use.

The Twin Cambridge University Differential Analyzer (Public Domain)

This machine had to be programmed by hand, which meant breaking it down and rebuilding it, using rods, wheels, and pulleys as variables in a complex ballistic equation. Eventually, many of the mechanical bits were replaced with electrical switches that moved levers here and there, reducing the time it took to break the machine down and rebuild it.

These switches sparked something in Shannon, who recalled a class he took at the University of Michigan that covered Boolean algebra. Turns out, this chap named George Boole had figured out a way to apply mathematical principles to simple logical propositions. Shannon extended those ideas and figured out how the entire room-sized computer could be replaced with a series of electrical circuits.

Claude Shannon invented the digital circuit.

Encryption, Hashing and Blockchain

The story kind of wrote itself from that point on. Digital circuits led to digital computers, which led to more efficient ways of calculating things, which led to the need to transmit that information, which led to the need to keep it a secret, which led to where we are today.

Scott and I dove into all of this.

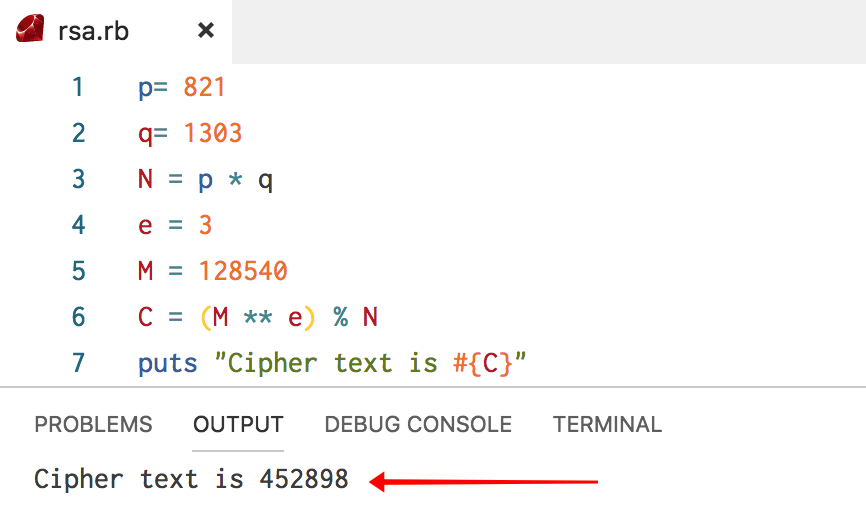

Cracking a simple asymmetric cipher

We explore SSH keys and how RSA works. We dive into hashes, discussing the good and the bad of each algorithm, including how Rainbow Tables can be used to quickly and easily crack hashed credit card information that hasn’t been salted.

Simplified doodle of SHA256
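
Here is a minimal Python sketch of why salting matters, using the standard library's `hashlib` (the helper names and the 16-byte salt length are assumptions, and this is not code from the book). Without a salt, the same input always produces the same SHA-256 digest, which is exactly what a precomputed rainbow table exploits. With a random salt per record, identical inputs produce different digests.

```python
import hashlib
import os
from typing import Optional


def unsalted_hash(secret: str) -> str:
    """SHA-256 of the secret alone: same input, same digest, every time."""
    return hashlib.sha256(secret.encode("utf-8")).hexdigest()


def salted_hash(secret: str, salt: Optional[bytes] = None) -> tuple[bytes, str]:
    """SHA-256 of salt + secret. Returns (salt, hex_digest).

    A fresh random salt is generated when none is supplied. The salt is
    stored alongside the digest so the hash can be recomputed later.
    """
    if salt is None:
        salt = os.urandom(16)  # 16 random bytes; the length is an assumption
    digest = hashlib.sha256(salt + secret.encode("utf-8")).hexdigest()
    return salt, digest


def verify(secret: str, salt: bytes, expected_digest: str) -> bool:
    """Recompute the salted hash and compare it with the stored digest."""
    return salted_hash(secret, salt)[1] == expected_digest
```

This only shows the salting idea. For real passwords you would want a deliberately slow key-derivation function such as `hashlib.pbkdf2_hmac` or `hashlib.scrypt` rather than a single round of SHA-256.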

We eventually end up with a discussion of cryptocurrency and blockchain, detailing why some people love it and others absolutely hate it. Both sides have some pretty good points…

It was a ton of fun writing this book – made even more so because I got to do it with a friend! I really hope you enjoy it!